At 2:14 a.m. Eastern time on August 29th, 1997, Skynet became self-aware.

— Terminator 2: Judgment Day (1991)

When ChatGPT first arrived on the scene last November, any number of scare-mongering articles appeared almost instantly, all sensationally proclaiming that a crazed, self-aware AI unleashed on the unsuspecting world could, at a stroke, wipe out humanity.

Then, a month or so later, another set of pundits (and tech executives) leaped up to say: no, RELAX, that’s simply not possible; ChatGPT is not self-aware.

Folks, Skynet is possible. Today. Right now.

Remember, AI is Really Just About Math

While there are lots of ways to “do AI,” the most common approach relies on the neural network, a software technique loosely modeled on how neurons work in the human brain. Through a process called training, the program learns from examples how to perform a task.

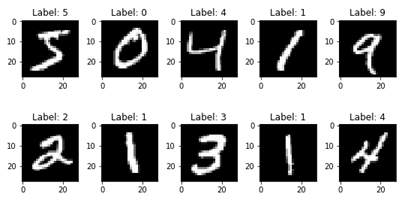

Here’s a simple example. Pay attention, this is important. Let’s say you have 10 hand-scrawled numerals, zero through nine, each on a 28×28 grid of pixels, like this (AI professionals and those in the know will recognize the industry-standard National Institute of Standards MNIST dataset):

A neural network that recognizes these numerals is built from “neurons,” simple units that together apply a set of mathematical equations to decide which number a particular set of pixels represents.

The details are more complicated, but think of it like this: training produces a huge mathematical equation, something like y = w1x1 + w2x2 + w3x3 + … + b.

Remember high school algebra? The result y (the number the network thinks the pixel pattern represents) is calculated by multiplying each input (that is, each pixel value: x1, x2, x3, and so on) by its own weight (w1, w2, w3, and so on), adding up the products, and then shifting the total by a bias (“b” in the equation, just one more number, also set during training, that nudges the answer so it comes out right).
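To make the algebra concrete, here is a minimal Python sketch of a single “neuron” computing y = w1x1 + w2x2 + w3x3 + … + b. The three pixel values, the weights, the bias, and the function name neuron_output are all made up for illustration. A real network stacks many such neurons in layers and usually passes each sum through a nonlinear “activation function,” which this sketch leaves out.

```python
def neuron_output(inputs, weights, bias):
    """Multiply each input by its weight, add up the products, then add the bias."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical pixel intensities (0.0 = blank, 1.0 = fully inked).
pixels = [0.0, 0.5, 1.0]
# Hypothetical weights and bias, not taken from any trained network.
weights = [0.2, -0.4, 0.8]
bias = 0.1

y = neuron_output(pixels, weights, bias)
print(round(y, 2))  # 0.2*0.0 + (-0.4)*0.5 + 0.8*1.0 + 0.1 = 0.7
```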

Weights and biases. Hang on to that thought.

Now, even a very simple neural network that recognizes just these ten scribbled numerals requires about 13,000 weights and biases. No human could ever come up with those by hand, which is why we have automated training to calculate them. (At its core, the concept is trivial: pick some numbers for the weights, see if they work, and if they don’t, adjust them. How you figure out the adjustment is the subject of immense amounts of math.)
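Both halves of that paragraph can be sketched in a few lines of Python. The first function assumes a common teaching layout for digit recognizers (784 input pixels from the 28×28 grid, two hidden layers of 16 neurons, and 10 outputs, one per numeral); that layout is an assumption, but it happens to land at 13,002 weights and biases. The second function is plain gradient descent on a single neuron: pick starting numbers, measure how wrong they are, and nudge them in the direction that shrinks the error. The helper names and the toy target line y = 2x + 1 are hypothetical, and real networks use backpropagation to work out these nudges across many layers at once.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    Each neuron has one weight per neuron in the previous layer,
    plus one bias of its own.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weights + biases for this layer
    return total


def train_one_neuron(data, steps=2000, learning_rate=0.05):
    """Fit y = w*x + b to (x, target) pairs by gradient descent on squared error."""
    w, b = 0.0, 0.0  # pick some starting numbers
    n = len(data)
    for _ in range(steps):
        # See if they work: accumulate how the squared error changes with w and b.
        grad_w = grad_b = 0.0
        for x, target in data:
            error = (w * x + b) - target
            grad_w += 2 * error * x
            grad_b += 2 * error
        # If they don't, adjust: step each number against its average gradient.
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


# Hypothetical 784-16-16-10 digit recognizer (28 * 28 = 784 input pixels).
print(count_parameters([28 * 28, 16, 16, 10]))  # 13002

# Toy training data drawn from the line y = 2x + 1.
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0]]
w, b = train_one_neuron(data)
print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```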

ChatGPT’s neural network, which grew out of OpenAI’s GPT-3, uses something like 175 billion weights and biases (GPT-3’s published figure).

But it’s still just math.

But If It’s Just Math, How Does Skynet “Achieve Self-Awareness?”

Who needs self-awareness?

Well, we do, obviously, and a rather depressingly large number of us lack it.

But modern AI technology does not require self-awareness to destroy the human race.

The war in Ukraine, in which drones play a major role, provides a hint of what could go wrong. Let’s assume that the drones have enough intelligence (that is, trained AI programs) to follow GPS coordinates to a location and to stay on course despite weather, obstacles, and perhaps even electronic interference. In other words, each drone is an independent AI machine.

Now this is different from the Skynet scenario in which the bad robots are centrally controlled by an uber-intelligent supercomputer. Instead, drones and cruise missiles and other sorts of autonomous weapons are completely independent once launched. Skynet is decentralized.

Let’s say that somebody somewhere programs a drone to attack soldiers wearing a particular sort of uniform – pretty easy to do. You train the AI with ten or twenty thousand images of people in different sorts of clothing until it accurately recognizes those uniforms. Then you install that trained AI (now just a small piece of software) into your drones.

But…

What if There’s a Bug? Or Worse…

We know this can happen. There are numerous examples of facial recognition and other AI systems that fail because they were not trained on enough examples of people of different ethnicities, of women, or (in speech recognition) of different accents.

So it’s easy to imagine the poorly trained drones attacking anybody in any uniform, or anybody wearing a green shirt. Or anybody.

Or…

What if someone futzes with those weights and biases we talked about before? That is, hacks the neural network at the heart of the AI? Predicting the results of such a hack would be pretty hard… but it would almost certainly lead to the wrong things being attacked: maybe simply “people” instead of “soldiers.” Or anything moving. Or – you get the idea.

Just Make This One a Zero and See What Happens

Of course, it’s pretty hard to imagine someone knowing exactly which of those 175 billion parameters (the weights and biases) need tweaking. (On the other hand, it’s not impossible either, and maybe not at all hard for software.) More likely are simple random changes that produce unexpected, unwanted, and possibly catastrophic results.
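To see why “make this one a zero” matters, here is a minimal Python sketch using a hypothetical four-pixel linear classifier (not any real drone software or AI model). Zeroing a single weight flips its answer for one input, and adding random noise to all the weights changes some share of its answers on 1,000 random inputs. The weights, bias, sample input, and function names are all made up for illustration.

```python
import random


def classify(pixels, weights, bias):
    """Toy linear classifier: answer 1 if the weighted sum plus bias is positive."""
    score = sum(w * x for w, x in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0


def count_flips(weights, bias, inputs, noise, seed=0):
    """Randomly perturb every weight, then count inputs whose answer changes."""
    rng = random.Random(seed)
    tampered = [w + rng.gauss(0.0, noise) for w in weights]
    return sum(
        classify(x, weights, bias) != classify(x, tampered, bias) for x in inputs
    )


# Hypothetical "trained" parameters and one sample input.
weights = [0.9, -0.3, 0.5, -0.7]
bias = -0.2
sample = [1.0, 0.0, 1.0, 1.0]

print(classify(sample, weights, bias))  # 1  (score = 0.9 + 0.5 - 0.7 - 0.2 = 0.5)

# Just make this one a zero and see what happens.
zeroed = weights.copy()
zeroed[0] = 0.0
print(classify(sample, zeroed, bias))  # 0  (score = 0.5 - 0.7 - 0.2 = -0.4)

# Random tampering: how many of 1,000 random inputs get a different answer?
input_rng = random.Random(1)
inputs = [[input_rng.random() for _ in range(4)] for _ in range(1000)]
print(count_flips(weights, bias, inputs, noise=0.5))
```

The exact flip count depends on the noise level and the random seed, which is rather the point: random edits to weights produce results that are hard to predict in advance.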

Whether it be via poor training or malicious hacking, it’s clear that “bad” AIs unleashed upon the world could have some pretty scary consequences.

Enjoy your Judgment Day.
