Barry Briggs

I recently experienced the completely horrifying realization that I’ve been writing code for half a century. It occurred to me that over the years I’ve actually learned some stuff possibly worth passing on, so this article is the first in an occasional series.

I’ve had the privilege (and it has been a true privilege!) to meet and work with many of the amazing individuals who shaped this remarkable industry, and to work on some of the software applications that have transformed our world.

It’s been quite a journey, and over the years I’ve come to realize that I started it in a way that can only be described as unique; indeed, I doubt that anyone began a career in the software industry in the same way I did.

So the first edition of “I Learned About Computing from That” is about how I actually first learned about computers.

Early Days

I wrote my first program in BASIC in high school on a teletype (an ASR-33, for old-timers) connected via a phone modem to a time-sharing CDC mainframe somewhere in Denver. As I recall, it printed my name out on the attached line printer in big block letters.

I had no idea what I was doing.

In college, I majored in English (really) but maintained a strong interest in science, with several years of physics, astronomy, math and chemistry, even a course in the geology of the moon. Yes, it was a very strange Bachelor of Arts I received.

In short, I graduated with virtually no marketable skills.

Luckily, I found a job with the federal government just outside Washington, D.C., where I was almost immediately Bored Out Of My Mind.

However, I soon discovered a benefit of working for Uncle Sam: a ton of free educational opportunities. A catalog listed page after page of in-person and self-paced courses, and thank God, because I desperately needed something to stimulate my mind.

Digital Design

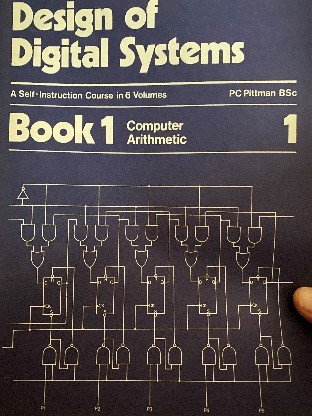

Having no idea what the subject actually was, other than that it sounded Really Cool, I chose a self-paced course called “Design of Digital Systems,” by PC Pittman (original publish date: 1974).

This utterly random decision changed the course of my life forever.

These six paperback booklets (I still have a few of them) began with different number bases (binary, octal, decimal), then introduced the concept of Boolean logic – AND and OR gates, and so on. It was hard!
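For readers who never worked through booklets like these, here is a small Python sketch of those first two ideas: one number written in several bases, and the truth tables for AND and OR. It is my own illustration, not material from Pittman's course, and the function names `AND` and `OR` are just illustrative.

```python
# Illustrative sketch: one value written in several number bases,
# followed by truth tables for the two basic Boolean gates.
# Bits are represented as Python ints, 0 or 1.

def AND(a: int, b: int) -> int:
    """Output is 1 only when both inputs are 1."""
    return a & b

def OR(a: int, b: int) -> int:
    """Output is 1 when at least one input is 1."""
    return a | b

n = 42
# Python's format codes: b = binary, o = octal, X = uppercase hexadecimal.
print(f"decimal {n}, binary {n:b}, octal {n:o}, hex {n:X}")
# decimal 42, binary 101010, octal 52, hex 2A

print("a b | AND OR")
for a in (0, 1):
    for b in (0, 1):
        print(f"{a} {b} |  {AND(a, b)}   {OR(a, b)}")
```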

After that it covered half-adders, more complicated gates (XOR) and eventually got to memory and – gasp! – the idea of registers.
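A half-adder is where those gates start doing arithmetic: the sum bit of two one-bit inputs is their XOR, and the carry bit is their AND. The sketch below, again my own illustration rather than the course's notation, builds XOR out of AND, OR and NOT, then uses it to add two bits.

```python
# Sketch: XOR and a half-adder built only from AND, OR and NOT.
# Bits are represented as Python ints, 0 or 1.

def NOT(a: int) -> int:
    return 1 - a

def AND(a: int, b: int) -> int:
    return a & b

def OR(a: int, b: int) -> int:
    return a | b

def XOR(a: int, b: int) -> int:
    # 1 when exactly one input is 1: (a OR b) AND NOT (a AND b)
    return AND(OR(a, b), NOT(AND(a, b)))

def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two single bits; return (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

for a in (0, 1):
    for b in (0, 1):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> carry {c}, sum {s}")
```

The last row, `1 + 1 -> carry 1, sum 0`, is binary 10, which is decimal 2: the carry bit is what lets adders be chained together to add bigger numbers.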

Finally, it described the notion of CPUs and instructions: how a number stored in memory could, when loaded by the CPU, cause another number to be fetched from memory and added to the contents of a register, leaving the sum in the register. The essence of a program! (To this very day!)
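To make that idea concrete, here is a toy machine in Python. The instruction encoding (opcode * 100 + address) and the four opcodes are entirely hypothetical, invented for this illustration rather than taken from any real CPU or from the course; the point is only that an instruction is itself just a number sitting in memory.

```python
# Toy accumulator machine (hypothetical encoding, for illustration only).
# Each instruction is a single number: opcode * 100 + address.
#   0 = HALT
#   1 = LOAD  (register <- memory[address])
#   2 = ADD   (register <- register + memory[address])
#   3 = STORE (memory[address] <- register)

HALT, LOAD, ADD, STORE = 0, 1, 2, 3

def run(memory: list[int]) -> int:
    """Execute the program starting at address 0; return the final register value."""
    register = 0  # the single working register (accumulator)
    pc = 0        # program counter: address of the next instruction
    while True:
        instruction = memory[pc]                    # fetch: the instruction is just a number
        opcode, address = divmod(instruction, 100)  # decode it into opcode and address
        pc += 1
        if opcode == HALT:
            return register
        elif opcode == LOAD:
            register = memory[address]
        elif opcode == ADD:
            register += memory[address]             # fetch another number and add it
        elif opcode == STORE:
            memory[address] = register
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 1}")

# Program: load memory[10], add memory[11], store the sum in memory[12], halt.
memory = [110, 211, 312, 0] + [0] * 6 + [7, 35, 0]
result = run(memory)
print(result, memory[12])
```

Running it prints `42 42`: the register holds the sum of 7 and 35, and the STORE instruction has written that sum back into memory.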

O the light bulbs that flashed on for me!

I suddenly got it, how – at a foundational level – computers work, and how they could be made to do useful things. For me at that point, everything else – assemblers, high-level languages (I took a FORTRAN programming class next), operating systems – fell out from this very profound gestalt of computing.

And I realized I loved this stuff.

They Teach Programming All Wrong Today

These days, high school students can take “computer science” classes which, at least in my son’s case, turned out to be nothing more than a Java programming class. Imagine being thrown into the morass of Java – classes, namespaces, libraries, variables, debugging, IDEs – with no understanding of what the heck is actually going on in the guts of the computer itself!

Completely the wrong approach! Guaranteed to confuse the hell out of a young mind!

As accidental as it was, I highly recommend my approach to learning the art of programming. Teach kids the logic underpinning all of computing first. A deep understanding of what’s actually happening at the digital logic level builds an intuition for how the machine behaves, which makes it so much easier to write code and to figure out what’s going wrong when it breaks.

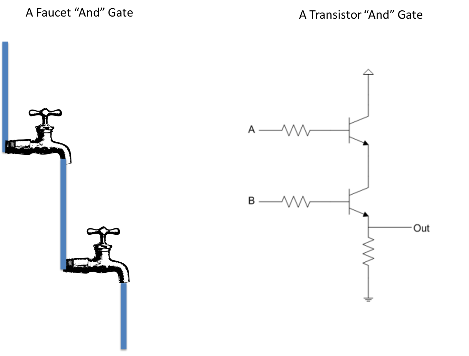

When I talk about how computers work, I start with a faucet (standing in for a transistor, which either lets current through or shuts it off) and tell people: if you get how this works, you understand how computers work.

String two faucets together, one after the other, and water flows only when both are open: you get an AND gate. You get the idea. And so on and so on.
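The faucet analogy translates almost directly into code. In this sketch, my own framing that assumes a faucet is simply open (`True`) or closed (`False`), faucets in series behave like an AND gate, and faucets side by side, in parallel, behave like an OR gate. The names `series` and `parallel` are illustrative only.

```python
# Faucet analogy sketch: each faucet (transistor) is either open (True) or closed (False).

def series(*faucets: bool) -> bool:
    """Faucets one after another: water gets through only if every faucet is open (AND)."""
    return all(faucets)

def parallel(*faucets: bool) -> bool:
    """Faucets side by side: water gets through if any faucet is open (OR)."""
    return any(faucets)

for a in (False, True):
    for b in (False, True):
        print("a:", a, "b:", b, "series (AND):", series(a, b), "parallel (OR):", parallel(a, b))
```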

Anyway …

That’s how I got started. Shortly after my introduction to Digital Design, I wound up taking (more formal) courses at George Washington University and Johns Hopkins (again courtesy of the US government), and not long thereafter this English major (!) found himself programming mainframe OS code for NASA (believe it or not).

Where I learned some very important lessons about coding. That’s for next time. Stay tuned!
